What Is Nvidia PhysX, What Does It Do?

What does PhysX have to do with it?

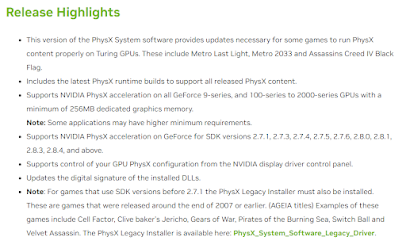

PhysX is a physics engine that NVIDIA acquired in 2008 and has developed further since. The aim was to make the PhysX code run on NVIDIA's own “Compute Unified Device Architecture” (CUDA). In concrete terms, this means that the physics engine can run on all current and future NVIDIA graphics cards.

In early games such as Cryostasis and Mirror’s Edge, this provided physically inspired fluid and cloth simulation, as well as interactive smoke, shattering glass panes and debris effects. You didn’t necessarily need GeForce hardware for this, because PhysX can also run on the CPU, but games turned into a slideshow as a result. At the time, the competition criticized the software implementation as intentionally inefficient, and a detailed analysis by Real World Technologies did later reveal several performance problems. These have since been fixed in newer versions of the engine.

It now works so well on the CPU that, for a few years, games have relied exclusively on the processor for PhysX simulations and no longer allow hardware acceleration at all. This is probably partly because only the source code for CPU-based PhysX has been released, which is why it can also be found in this form in the Unity and Unreal engines. The technology may therefore continue to exist; with the statement “PhysX is dead” I am explicitly referring to the extinction of the CUDA implementation.

1. Performance

After reading the previous section, one might wonder why such a big drama is being made about the supposed death of PhysX when it has only moved from the GPU to the CPU. The crux of the matter, however, is that the complexity of the effects used was drastically reduced with that move. Borderlands 2 probably made the best use of PhysX. In earlier and later titles, many of the gimmicks often looked too staged, while in Borderlands they matched the comic style and wacky gun action perfectly.

If you look at the latest installment, most of that is missing. Since it uses Unreal Engine 4, PhysX on the processor should still be in use, especially since Candyland's graphics video shows some cloth blowing in the wind and interactive explosion particles. Nevertheless, it is a joke compared to before. As already mentioned, the state of Borderlands 3 is no longer the result of a lack of CPU optimization; the technology is simply used more sensibly now, that is, for less complex effects.

It’s not as if titles at that time ran smoothly, even with CUDA hardware. In the aforementioned Cryostasis, the minimum fps dropped to a third with PhysX; in Batman: Arkham Asylum, the average frame rate was already halved with PhysX set to medium. This is very reminiscent of RTX. The respective graphics effects are simply not worth the cost for what they deliver visually. Take a look at this year’s Metro Exodus: while in the predecessor Last Light particles flew out of bullet holes, bounced off objects and rolled around, six years later they simply disappear into the ground. A grid for collision detection is already available, so “it just works” would have been feasible for PhysX. But it is simply dropped in favor of performance, even though this is “real” CUDA PhysX. The same applies to cloth (2013 vs 2019).

By the way, if Exodus hadn’t been released, the “latest” games with GPU PhysX support would be the flop Bombshell, released in early 2016, and the more than four-year-old Batman: Arkham Knight, even if the latter's disastrous performance wasn’t solely due to the cooperation with NVIDIA.

Beyond that, PhysX was never used in games for collapsing houses or really striking destruction effects of the kind you know from Battlefield 3 or Red Faction: Guerrilla. Instead, it usually only provided smaller particle effects. As soon as NVIDIA was no longer interested in using PhysX as an advertising platform, the technology was only used on this limited scale, which makes sense once performance is taken into account. Since RTX is often seen as a big reason to buy, it seems logical that the same fate could befall RTX, especially since we see once again that the performance is not sufficient for use on a larger scale, at least not with current cards.

2. Limiting Marketing Tool

Marketing is actually a bigger issue than you might think. Brand loyalty is very important for a company, and NVIDIA pursues it by every means. The GeForce Partner Program (GPP), G-Sync modules, “The Way It’s Meant to Be Played” and GameWorks would not have existed otherwise. In addition, you quickly realize that something is an advertising gimmick when the demonstration relies on unfair comparisons. In Arkham Asylum, for example, entire flags disappear when PhysX is turned off, instead of simply being replaced with static models. The same applies to RTX, which was introduced in Battlefield V as a “cure” for screen-space reflections, even though planar reflections or dynamic cube maps would perform better and would even look more attractive overall than RTX.

In hindsight, it almost seems as if the graphics effects were only staged conspicuously when they were meant to be advertised heavily. For Metro: Last Light, there was a PhysX comparison video directly from NVIDIA. At some point, however, this no longer seems to have thrilled people as much, or NVIDIA needed a counter to AMD’s TressFX hair simulation. In any case, for The Witcher 3 only GameWorks was mentioned, and although PhysX remained in use at least on the CPU, it was not mentioned by name in the official demonstration clip. For the joke of a PhysX implementation in Metro Exodus, and for HairWorks as well, there was then no video at all.

But why bother? According to hardware surveys, pretty much everyone uses a GeForce anyway, so NVIDIA prefers to advertise the new RTX in videos, since that at least works as a purchase incentive. A for-profit company is allowed to do this, but Battlefield V and Control have no GameWorks effects besides RTX, and Shadow of the Tomb Raider even uses PureHair, Eidos Montreal's further development of TressFX, instead of HairWorks. Let's not even start on PhysX here, but does this mean that RTX will disappear as soon as all cards support it?

In the reboot trilogy, Lara is on the move with modern hair.

The poor performance, combined with the apparent compulsion to use the technologies prominently and almost purely as an advertising tool, naturally ensures that no reasonable developer would voluntarily integrate such a thing into their game or graphics engine. Instead, NVIDIA always has to approach a potential partner as a sponsor first. As such a partnership incurs costs, this resulted in comparatively few titles with PhysX over the years. Judging by current developments, RTX seems to be no exception, although it runs through Microsoft’s “DirectX Raytracing” (DXR) and thus, unlike GPU PhysX, does not require any special license agreements for developers.

This gives rise to the hope that ray tracing might get a boost soon. Hardware-accelerated PhysX was reserved exclusively for GeForce cards, as NVIDIA did not want to share its purchased technology. Thanks to DXR as part of DirectX 12, however, it is also possible that AMD could bring out an RTX counterpart, including a matching GPU. This would of course help keep ray tracing in this form from degenerating into a niche product like PhysX.

3. CUDA vs Tensor + RT

A clear difference between the two technologies is that RTX relies on specialized computing units built into the chip, whereas, as mentioned above, PhysX is adapted directly to the CUDA cores, which are also used for graphics computation in ordinary games. PhysX is more flexible in this regard, as no silicon goes to waste in games without PhysX. The RT cores, on the other hand, relieve the remaining units, but the loss in frame rate is nevertheless immense.

At the same time, there is the possibility that ray tracing might migrate back to the CUDA cores, as with PhysX, or this time also to the stream processors, the AMD equivalent. We’ve seen Crytek present real-time ray tracing on a Vega 56. Since no executable version of the demo is offered, I remain rather skeptical. However, even the PTGI shader mod for Minecraft runs surprisingly well without RT cores despite its alpha status, if you compare it with the RTX version of Quake II. In addition, ray tracing has been available on selected GTX cards for about half a year, albeit running even worse. NVIDIA always emphasizes very strongly that ordinary GPUs are not suitable for this at all, but we already had similar and ultimately false claims with PhysX on the CPU.

Ray-traced reflections as a software solution in the photo mode of TrackMania2.

In the meantime, we know that AMD's next RDNA architecture, which is planned for 2020, will definitely support ray tracing in hardware. More details can only be speculated about, but one of the company's patents has made the rounds, which introduces a Texture Processor Based Ray Tracing Acceleration Method. This is a hybrid approach that uses fixed-function hardware acceleration in the form of texture processors for the costly bounding-volume intersection tests and traversal steps of ray tracing. These units receive corresponding instructions from the “ordinary” shader units (i.e. those that can also be used in classic games) and return the calculated result to them. Compared to entirely hardware-side solutions such as RT cores, this has several advantages: caches and buffers are used more efficiently, scheduling can easily be handled in software, less chip area is required, and the flexibility to control the calculations in software is retained, while the performance advantage remains.
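To make the split between software-controlled traversal and a fixed-function intersection test more tangible, here is a minimal sketch in Python. It is not taken from AMD's patent or from any real driver or API: the names `Node`, `intersect_box` and `traverse` are hypothetical, the standard slab test stands in for whatever the hardware unit would actually compute, and the tiny two-leaf scene exists only for illustration.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Node:
    """Hypothetical bounding-volume hierarchy node (axis-aligned box)."""
    lo: Vec3
    hi: Vec3
    children: List["Node"] = field(default_factory=list)
    payload: Optional[str] = None  # leaf label standing in for real geometry


def intersect_box(origin: Vec3, direction: Vec3, lo: Vec3, hi: Vec3) -> bool:
    """Ray/box slab test. Assumption: this plays the role of the
    fixed-function unit that answers a single intersection query."""
    t_near, t_far = 0.0, float("inf")
    for o, d, l, h in zip(origin, direction, lo, hi):
        if d == 0.0:
            # Ray is parallel to this slab: it must start inside it.
            if o < l or o > h:
                return False
            continue
        t0, t1 = (l - o) / d, (h - o) / d
        if t0 > t1:
            t0, t1 = t1, t0
        t_near = max(t_near, t0)
        t_far = min(t_far, t1)
        if t_near > t_far:
            return False
    return True


def traverse(root: Node, origin: Vec3, direction: Vec3) -> List[str]:
    """Traversal loop. Assumption: this plays the role of the shader
    program, which owns the stack and decides what to test next."""
    hits: List[str] = []
    stack = [root]
    while stack:
        node = stack.pop()
        if not intersect_box(origin, direction, node.lo, node.hi):
            continue  # prune the whole subtree
        if node.payload is not None:
            hits.append(node.payload)
        stack.extend(node.children)
    return hits


# Tiny illustrative scene: one root box containing two leaf boxes.
left = Node((0.0, 0.0, 0.0), (1.0, 1.0, 1.0), payload="left")
right = Node((3.0, 0.0, 0.0), (4.0, 1.0, 1.0), payload="right")
root = Node((0.0, 0.0, 0.0), (4.0, 1.0, 1.0), children=[left, right])

# A ray travelling along +z through the left box only.
print(traverse(root, (0.5, 0.5, -1.0), (0.0, 0.0, 1.0)))
```

In this sketch, the flexibility argument shows up in `traverse`: because the loop is ordinary software, the order of tests, early exits or a different stack strategy could be changed without touching `intersect_box`.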

However, what comes of this in practice, and which path is ultimately the most worthwhile, remains to be seen. Since even Intel will join the race next year with a dedicated GPU and a ray tracing accelerator, future RTX versions are likely to develop more dramatically and faster than PhysX did. Today's hardware could thus become useless within a year.

4. Lead Platform

As always, the consoles will be the focal point and perhaps also a damper. Console titles aim for the highest possible graphics quality per unit of performance (call it GPP, not to be confused with the partner program of the same abbreviation), and enabling RTX unfortunately results in a very poor GPP. And even if it were desired, the next PlayStation and Xbox generation will rely on AMD hardware. Likewise, there were no elaborate PhysX effects on the current models. As a glorious PC gamer, you might proudly claim that you don’t care what components the “dirty” consoles are equipped with, but the majority of blockbuster games are sold on them. As the lead platform for development, they also largely determine the graphical splendor. As I said, exclusive PC features are possible via partnerships, but the number of titles remains limited.

In Deus Ex: Mankind Divided, PureHair also made it into the console versions. The competing HairWorks, on the other hand, never appeared there.

There is relatively little information about the exact next-gen specifications. In April, Sony spoke about ray tracing, unfortunately without going into detail. Instead, the focus was more on the potential use of the technology for three-dimensional audio simulation than for graphics. This left strong doubts as to whether you will really get to see halfway decent ray tracing on the new PlayStation, especially since the last consoles, at the time of their release, matched the graphics performance of a GPU that cost at most €250 back then, and you do not get any RTX for that money at the moment. While I was writing the article you are reading, however, new information came out: it was actually confirmed that there is “ray-tracing acceleration in the GPU hardware”. Does this perhaps mean that ray tracing will soon finally be available without artifacts, on a decent scale and at reasonable prices? Could AMD have been working on it in secret (its patent was filed about two years ago) and simply not wanted to launch a half-baked technology early, as NVIDIA did? (A)

This would probably be the dream scenario of every console peasant and PC guy who wants to upgrade in 2020. However, I consider it rather unlikely. A lot can change in half a year, and I could well imagine that Sony simply wasn’t sure in April whether it really wanted to invest in ray tracing hardware. A more moderate assumption would therefore be that, for next year's consoles, AMD might be able to compute rays about as fast as an RTX 2060 Super while needing less chip area, and that this capability is used much more cautiously, for example exclusively for shadows close to the camera or for selected mirrors. In the high-end segment, AMD would then at least be on par with current NVIDIA hardware. (B)

On the other hand, the hype around RTX seems to be making much bigger waves than was the case with PhysX. For every game or piece of hardware, people ask whether it supports ray tracing. Engine demos, game modifications and post-processing injectors come with various kinds of ray tracing. And even a game from 2010 gets a patch for a puny bit of ray tracing in software. In the latter case, of course, I mean the small-scale implementation in World of Tanks based on Intel’s oneAPI. So what if the advantages of the hybrid calculation are only marginal or even cause real problems, and you still want to exploit the popularity of this one magic word without big hardware costs? Exactly: for example, tiny detail shadows for the main character or the vehicle in third-person games, as is the case in WoT. Thanks to the appropriate hardware, you save the few percent of performance otherwise required (*cough*, yes, very little loss), but it is much more important that you can enchant potential buyers with the magic word in next-gen screenshots. The graphics are supposed to get better anyway, and the average noob will not care how many rays are really behind it.

Guess who developer Slightly Mad Studios worked with.

At the same time, this bleak future would mean for the PC that NVIDIA’s RT cores retain a (significant) lead. It could turn out similar to GameWorks or tessellation. The Witcher 3 and Project CARS performed very poorly on AMD cards. For the racing game, Reddit users suspected PhysX as the culprit, but it in turn only ran on the CPU for all cards. With Geralt and his hairy little friend (I think he called him Roach), however, it was found that unnecessarily extreme tessellation levels were used for HairWorks and FurWorks. This cost a lot of performance on NVIDIA’s own models, and the competition's graphics cards suffered even more. In addition, the Kepler chips ran relatively poorly in both titles compared to the then-current generation, so that initially, for example, a GTX 960 beat the otherwise clearly superior predecessor “flagship” GTX 780 (in quotation marks, since the Ti madness started around that time). The point of this is to look superior in benchmarks, as many reviewers turn the graphics details up to the maximum. (C)

In that possible timeline, what would keep NVIDIA from pursuing something similar with the upcoming Ampere architecture? Simply install more RT cores and “convince” game developers to offer only a binary ray tracing option (completely on or nothing at all). Oh, and of course do not forget to raise the price again. The 2000 series could then be overwhelmed, and the next generation could be praised as a worthwhile upgrade.
